Muhammad Safwan, Zain ul Abidin and Saud Ahmed

Supervised by: Dr. Maria Waqas, Assistant Professor, Computer and Information Systems Engineering Department, NEDUET

CHAPTER 1 – INTRODUCTION

Introduction

With the continuous development of the economy, vehicles have become an indispensable part of daily life. As a result, our surroundings are becoming increasingly congested, which creates multiple problems that need to be solved.

We focus on parking systems. Typically, parking management uses a counter at the checkpoint to track the number of vehicles entering and exiting, and in Pakistan many parking areas use a hardware-based approach to detect the exact parking space. Although modern vehicles have their own parking systems installed, it is still hard for these systems to confirm whether a vacant parking space truly exists. Our project idea was conceived to overcome this problem.

In the NED University parking area in particular, guards and parking management staff guide students on whether a parking space is vacant or occupied. This takes a lot of time and forces many others to wait in a queue for their turn.

A parking management system based on image processing offers a modern and innovative solution for temporary parking places where no specific parking system is in place. It reduces the rush at peak times and helps users park their cars correctly.

1.1 Beneficiaries

Manually monitoring every car to check whether its owner has parked it properly in the right location is a hectic process for the management. With a simple mobile application, parking management can monitor the lot without visiting the actual place or posting a dedicated staff member to look after it, which would increase costs and add a burden on the institution's finances. The application therefore reduces this cost.

1.2 Literature Review

With the continuous development of the economy, vehicles have become indispensable tools in people’s daily lives. However, ‘difficult parking’ has now become an urgent issue. Detecting the status of parking spaces in a parking lot is the most fundamental prerequisite for modern intelligent parking management and guidance systems.

An automated parking availability system detects the available spaces within certain areas of interest, and many researchers have worked on making such systems accurate. The authors of [1] (on-vehicle video-based parking lot recognition with fisheye optics) proposed a vision-based method for detecting free parking spaces. However, they focused only on the simplest situations, with white parallel lines on the ground, and did not address image stitching. The authors of [2] proposed a surround-view-based algorithm for detecting and tracking parking areas. However, the method only works when the ground is clean, without too much debris or light reflection, as in underground garages. In addition, the algorithm does not determine whether a detected space is vacant, which causes problems in practice.

The authors of [3] introduced a complete system that uses both a surround-view system and an ultrasonic method to find parking spaces and determine their availability. They paid more attention to the detection problem but neglected image stitching. In addition, the paper focused only on an indoor environment and did not show results on special ground with strong linear texture, such as brick-paved ground.

1.3 Methodology

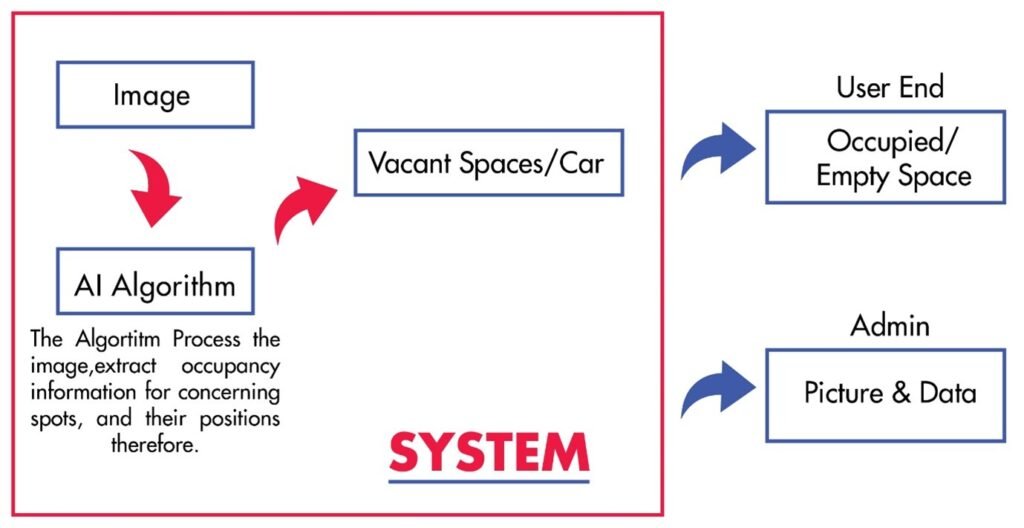

Our project aims to detect and recognize vacant parking spaces in real time. It comprises the following components:

1.3.1 Camera

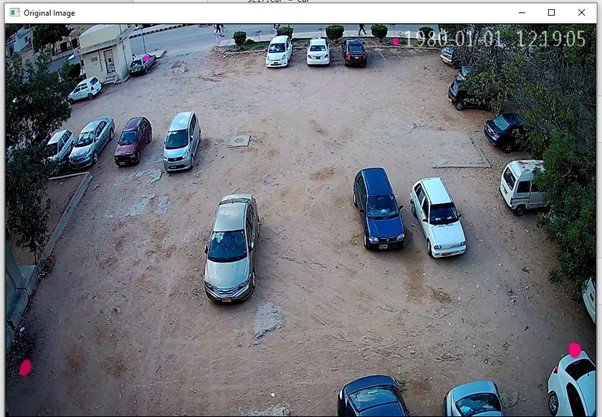

The camera, mounted on the rooftop of the Book Bank Library at an angle that covers the maximum area of the parking lot, provides the input.

1.3.2 Car Detection Module

The car detection module detects the cars within the ROI (Region of Interest) using a neural network.

1.3.3 Parking Space Detection Module

This module detects the parking spaces in an image. It generates virtual parking lines, which are visible to the user in the app and help the user park the vehicle. If, due to human error, someone parks a vehicle across the lines or otherwise incorrectly, this is shown on the admin side of the application along with the count of wrongly parked cars.

1.3.4 Admin Module to Start the process

The admin module starts the process by randomly selecting images from the dataset to be processed. The processed image is saved to the Firebase Realtime Database. The admin module is built with Tkinter.

1.3.5 User Application

When users open the application, they can see an image of the parking lot and the number of vacant spaces in it. For ease of use, a help screen explains how to check the status of the parking lot.

1.3.6 Admin Application

The admin application shows more detailed data: the total number of parking slots, the total number of cars, vacant spaces, occupied spaces, and the number of incorrectly parked cars.

1.4 Block Diagram

1.5 Tools

The following tools were used during the project:

1.5.1 Python

The Python programming language is used to train the models and perform the image processing.

1.5.2 Android Studio

Android Studio is used to develop the mobile application for the user and the admin.

1.5.3 Firebase

Firebase is used as the real-time database. The output image is stored along with other information, such as the total number of parking slots, the number of vacant and occupied slots, and the number of correctly and wrongly parked cars.

1.5.4 Vgg Annotator

To train our model, the data needs to be annotated. We used the VGG Annotator to annotate our data.

CHAPTER 2 – Software Design

This chapter gives a brief overview of the Vehicle Parking Management System by image processing, covering the background, the scope of the project, and the end product.

2.1 Project Scope

2.1.1 Description

The Vehicle Parking Management System by image processing aims to provide vision-based detection of vacant parking areas, offering a modern and innovative solution for temporary parking places.

2.1.2 Motivation

Our project introduces vision-based detection of vacant parking areas. The solution targets temporary parking places, for example dirt grounds or cemented floors where no specific parking system is used. We have focused on the NED University parking area in order to reduce the rush at peak times for students, parking management, and the administration. Our prime objective is to maximize the number of cars that can be parked in a temporary lot.

2.2 Functional Requirements

| Function 1 | Get Started (User) |
|---|---|
| Input | The user launches the mobile application. |
| Output | The home screen appears. |

| Function 2 | Get Started (Admin) |
|---|---|
| Input | The admin launches the admin application. |
| Output | The login form appears. |

| Function 3 | Log Out (Admin) |
|---|---|
| Input | The admin presses the logout button. |
| Output | The admin application is closed. |
| Processing | All background processes are exited. |

| Function 4 | Credit Screen (User) |
|---|---|
| Input | The user presses the credits button. |
| Output | The credits screen appears, where the user can see the developers and their details. |
| Processing | All background actions are closed and the user is directed to the new page. |

| Function 5 | Reset Password (Admin) |
|---|---|
| Input | The admin presses the reset password button. |
| Output | A new password window is prompted. |
| Processing | The old password is overwritten with the new password. |

2.3 Performance Requirements

Performance is one of the essential measures; performance requirements define the acceptable response time of the system's functions, and better hardware generally gives better performance. The performance requirements for the application are:

- The application should load within five seconds.

- The application should be user-friendly.

- The processing time, from taking the input to displaying the output, should not exceed two seconds.

- The admin must be authenticated.

2.4 Safety & Security

No critical security measures are required; the only authentication needed is on the admin side.

2.5 Software Quality Attributes

2.5.1 Reliability

The software should perform its intended functions under specified conditions without system failure. The application is designed to be compatible and user-friendly so that it runs smoothly on any device.

2.5.2 Usability

Usability is essential for serving the user well; to increase usability, we have to make sure the application is user-friendly.

2.5.3 Efficiency

Efficiency refers to how fast the application responds to the user and how much power it consumes.

2.5.4 Correctness

Correctness refers to whether the application responds as expected when the user interacts with it in the intended way.

2.5.5 Maintainability

Maintainability refers to how easily and quickly problems found in the mobile application of the parking management system can be fixed.

CHAPTER 3 – Data Processing

3.1 Data Collection

Data collection is one of the most fundamental prerequisites in machine learning and artificial intelligence: the collected data is analyzed to find recurring patterns, and from those patterns predictive models are built with machine learning algorithms, in our case to detect vacant and occupied spaces.

Collecting the data was not an easy task. We submitted a written application to the Chief Librarian requesting permission to mount a V380 bullet camera on the rooftop of the NED University Book Bank Library to cover the temporary parking area near Ali Photostat; the request was later approved by the administration. We chose the V380 WiFi bullet camera for its good resolution and large coverage area.

The V380 WiFi bullet camera can be accessed remotely from an Android mobile phone, which we used to capture pictures for the dataset. At most, we collected about two to three unique pictures per day, as most cars stayed in the same place all day.

3.2 COVID-19 Outbreak

The COVID-19 outbreak was declared on 29th February 2020, and vacations were extended to control the situation. The new, deadly, and contagious virus had a worldwide impact. It soon began to affect Pakistan as well, and the government announced a complete lockdown in March 2020, which caused us serious difficulties.

Before the lockdown, we had only a limited number of unique images, which was not enough to train our model.

As our meetings moved from physical to online, and with no further access to the camera due to the lockdown, we decided to recreate our dataset from the old images. We used Adobe Photoshop to create images that look approximately realistic and unique. Recreating images with measured, unique parameters was not easy; each recreated image took approximately an hour to complete.

During recreation, we changed the locations of cars, pasted in new cars, removed some cars, and placed marks on the corners.

3.3 Data Filtering

The collected and recreated data was passed through a filtering process in which near-duplicate images and unusable images were removed. The remaining data was ready to be processed.

3.4 Data Annotation

Once the data had been collected and filtered, it had to be labeled, because a supervised machine learning algorithm requires a labeled dataset to learn the input patterns. We annotated the dataset to train our model to detect cars, using the VGG annotator.

CHAPTER 4 – Workflow

4.1 Data Training

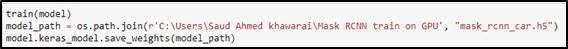

So far, we have collected, recreated, filtered, and annotated the data. The data is now ready for training the neural network.

Different kinds of neural networks are used depending on the scope of a project, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs). The neural network we use is the Mask Region-based Convolutional Neural Network (Mask RCNN).

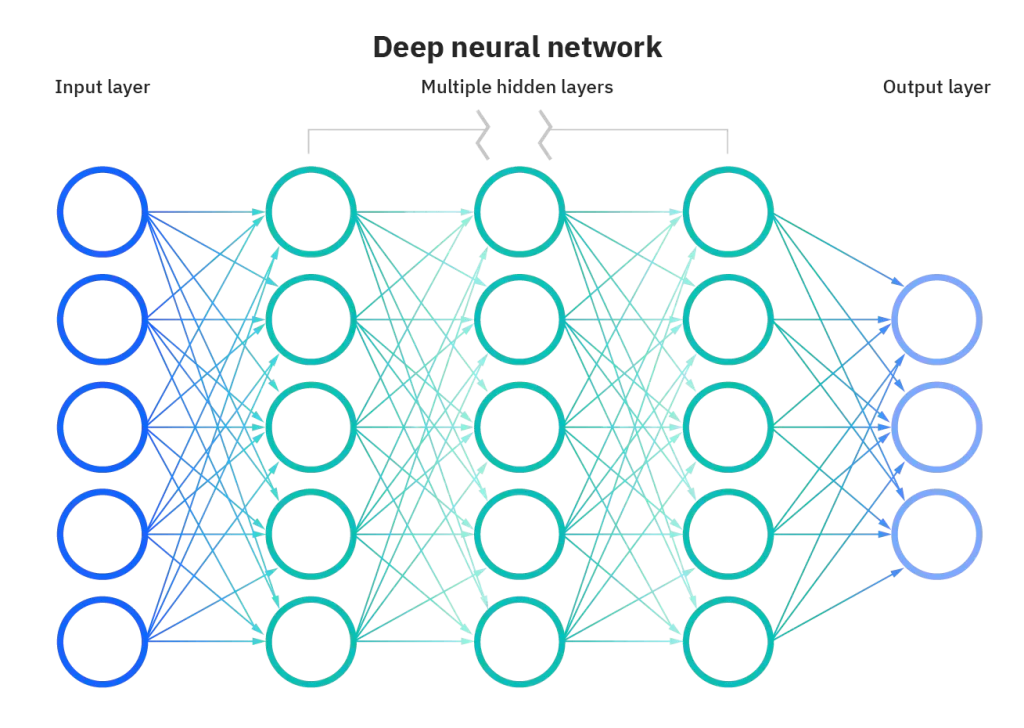

4.1.1 Convolutional Neural Network

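A Convolutional Neural Network (CNN) is a class of deep neural network most commonly used to analyze visual imagery. CNNs can be viewed as regularized versions of multilayer perceptrons: in a multilayer perceptron, every neuron of one layer is connected to all neurons of the next layer, whereas in a convolutional layer each neuron is connected only to a small local region of the previous layer, and the same weights are shared across the whole image.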

A CNN consists of an input layer, an output layer, and many hidden layers. The hidden layers typically include a series of convolutional layers, each computing dot products between a learned kernel and local patches of its input. The activation function is commonly ReLU, and the convolutional layers are followed by additional layers such as pooling, fully connected, and normalization layers. These are called hidden layers because their inputs and outputs are not directly visible outside the network. The weights of all these layers are learned through backpropagation. [6]

4.1.2 Convolution

Convolution is a matrix-based filtering operation that takes the image as the first matrix and a second, smaller matrix, the kernel, as the filter. The filter visits every pixel of the image; for each pixel, it multiplies the value of that pixel and the values of its surrounding pixels by the corresponding kernel values and adds up the results. The resulting sum becomes the value of that pixel in a new output image.

Fig 4.2 Convolution
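The following NumPy sketch illustrates the operation described above on a grayscale image. It zero-pads the borders so the output keeps the input size, and, like cv2.filter2D and most CNN layers, it does not flip the kernel (strictly speaking, this is cross-correlation). The function name `convolve2d` is illustrative and is not taken from the project code.

```python
import numpy as np

def convolve2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Apply an odd-sized kernel to a 2D image with zero padding ('same' output size).

    The kernel is not flipped, matching cv2.filter2D and CNN convolution layers.
    """
    kh, kw = kernel.shape
    pad_h, pad_w = kh // 2, kw // 2
    padded = np.pad(image.astype(float), ((pad_h, pad_h), (pad_w, pad_w)))
    out = np.zeros(image.shape, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            # Multiply the pixel and its neighbours by the kernel values and sum them.
            window = padded[i:i + kh, j:j + kw]
            out[i, j] = np.sum(window * kernel)
    return out

# Example: a 3x3 averaging (box) kernel spreads a single bright pixel over its neighbours.
img = np.zeros((5, 5))
img[2, 2] = 9.0
print(convolve2d(img, np.ones((3, 3)) / 9.0))  # a 3x3 block of ones around the centre
```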

4.1.3 Pooling

Pooling reduces the dimensions of the data by combining the outputs of a cluster of neurons in one layer into a single neuron in the next layer. In max pooling, the maximum pixel value within each window of the input is taken and mapped onto the output image.
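A minimal NumPy sketch of 2×2 max pooling with stride 2 follows; the window size and the example feature map are illustrative assumptions rather than values from the project.

```python
import numpy as np

def max_pool2d(feature_map: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling (stride == size) on a 2D feature map.

    Rows/columns that do not fill a complete window are dropped.
    """
    h, w = feature_map.shape
    h_out, w_out = h // size, w // size
    trimmed = feature_map[:h_out * size, :w_out * size]
    # Reshape into (h_out, size, w_out, size) blocks and keep the maximum of each block.
    blocks = trimmed.reshape(h_out, size, w_out, size)
    return blocks.max(axis=(1, 3))

fm = np.array([[1, 3, 2, 0],
               [4, 2, 1, 5],
               [0, 1, 7, 2],
               [6, 2, 3, 1]])
print(max_pool2d(fm))  # expected: [[4, 5], [6, 7]]
```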

4.1.4 Fully Connected

The principle is the same as in a multilayer perceptron (MLP), where each neuron is connected to every neuron in the next layer. To classify the image, the feature maps are flattened into a vector that passes through the fully connected layers.
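To illustrate flattening followed by a fully connected layer, the sketch below maps a pooled 2×2 feature map to scores for two hypothetical classes (for example, car and background) and converts them into probabilities with softmax. The weights are random and untrained, so the output only demonstrates the data flow, not a real prediction.

```python
import numpy as np

def dense_layer(features: np.ndarray, weights: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Fully connected layer: every input feature contributes to every output neuron."""
    flat = features.reshape(-1)  # flatten the pooled feature map into a vector
    return weights @ flat + bias

def softmax(logits: np.ndarray) -> np.ndarray:
    """Turn raw class scores into probabilities (shifted for numerical stability)."""
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()

rng = np.random.default_rng(0)
pooled = rng.random((2, 2))      # e.g. the 2x2 output of a pooling layer
W = rng.standard_normal((2, 4))  # 2 hypothetical classes x 4 flattened features (untrained)
b = np.zeros(2)
probs = softmax(dense_layer(pooled, W, b))
print(probs, probs.sum())
```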

4.1.5 Dropout

Because the fully connected layers contain most of the parameters, they are prone to overfitting. To reduce overfitting, at each training stage individual nodes are either "dropped out" of the network with probability 1-p or kept with probability p, so that a reduced network is left; the incoming and outgoing edges of a dropped-out node are also removed. Only the reduced network is trained at that stage, and the removed nodes are then reinserted into the network with their original weights. [6]
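The sketch below implements dropout as described above, with `p_keep` playing the role of p. At inference time it keeps every unit and scales the activations by p, which matches reinserting the nodes and scaling their outgoing weights by p; many frameworks instead use "inverted" dropout, which scales by 1/p during training. The function and parameter names are illustrative.

```python
import numpy as np

def dropout(x: np.ndarray, p_keep: float, training: bool, rng: np.random.Generator) -> np.ndarray:
    """Classic dropout.

    Training: each unit is kept with probability p_keep and dropped (set to 0)
    with probability 1 - p_keep, so only a thinned network is used.
    Inference: all units are kept and outputs are scaled by p_keep so that the
    expected activation matches what the next layer saw during training.
    """
    if not training:
        return x * p_keep
    mask = rng.random(x.shape) < p_keep  # True -> keep, False -> drop
    return x * mask

rng = np.random.default_rng(42)
activations = np.ones(10)
print(dropout(activations, p_keep=0.5, training=True, rng=rng))   # mix of 0s and 1s
print(dropout(activations, p_keep=0.5, training=False, rng=rng))  # all 0.5
```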

4.1.6 Transfer Learning

Transfer learning is an optimization technique that saves time and often achieves better performance: a model pre-trained on a large dataset is reused as the starting point for a new task. Popular pre-trained models based on large convolutional neural networks include VGGNet, ResNet, DenseNet, and Google’s Inception. Most of these networks are trained on ImageNet; its widely used ILSVRC subset contains over 1 million labeled images in 1,000 categories.

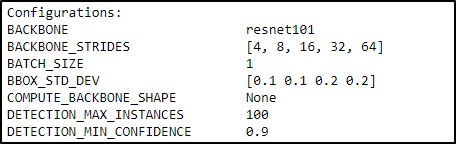

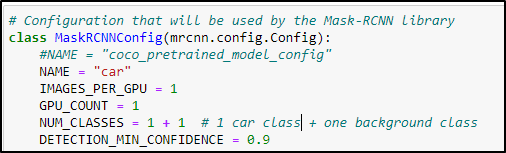

4.1.7 Mask RCNN

Mask RCNN is commonly used for instance segmentation, which identifies the pixels belonging to each individual object. It creates a bounding box and a segmentation mask for each occurrence of an object in the image. Mask RCNN uses a ResNet-101 backbone combined with a Feature Pyramid Network (FPN) to extract features from the input image: the input image is forwarded to ResNet-101, and the extracted features are used as input for the subsequent layers. [7]

Mask RCNN is an extension of Faster RCNN, which is commonly used for object detection. For an input image, Faster RCNN returns the class label and bounding box coordinates of each object in the image. Mask RCNN is built on top of Faster RCNN, so for an input image it also returns a mask for each object, in addition to the class label and bounding box coordinates.

Mask RCNN works in two stages. First, it generates region proposals where an object of a specific class might be present. Second, based on these proposals, it predicts the class of each object, refines its bounding box, and generates a pixel-level mask of the object. Both stages are connected to the backbone structure, which is the ResNet-101 with FPN discussed earlier. In the first stage, a lightweight neural network called the Region Proposal Network (RPN) scans the FPN feature maps and proposes regions that may contain objects; the goal of this step is only to obtain a set of bounding boxes that could contain an object of a specific class. In the second stage, another neural network takes the proposed regions, classifies them, and generates the bounding boxes and masks of the object classes.

4.1.8 Why Mask RCNN

Object detection differs from classification in that a detection algorithm also draws a bounding box around each object of interest to locate it within the ROI, with multiple bounding boxes representing different objects in the region of interest.

The main reason this problem cannot be solved by simply building a standard convolutional network followed by a fully connected layer is that the length of the output layer is variable rather than constant, because the number of objects within the ROI is not fixed. A naive approach is to take different regions of interest from the image and use a CNN to classify whether the object is present within each region. The problem is that objects can appear at different spatial locations within the image and with different aspect ratios, so a huge number of regions would have to be selected, which greatly increases the computational cost. Algorithms such as R-CNN and YOLO have therefore been developed to find these occurrences quickly.
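To make the computational cost concrete, the sketch below counts how many windows a naive sliding-window detector would have to classify with a CNN. The window sizes, aspect ratios, and stride are arbitrary illustrative values, not parameters used in this project.

```python
def count_sliding_windows(img_w, img_h, base_sizes, aspect_ratios, stride):
    """Count candidate windows a naive detector would pass to a CNN classifier."""
    total = 0
    for size in base_sizes:
        for ratio in aspect_ratios:  # ratio = width / height, area kept near size**2
            win_w = int(round(size * ratio ** 0.5))
            win_h = int(round(size / ratio ** 0.5))
            if win_w > img_w or win_h > img_h:
                continue  # this window does not fit in the image
            nx = (img_w - win_w) // stride + 1
            ny = (img_h - win_h) // stride + 1
            total += nx * ny
    return total

# Three sizes x three aspect ratios on a 650 x 650 image with an 8-pixel stride.
print(count_sliding_windows(650, 650, [64, 128, 256], [0.5, 1.0, 2.0], 8))
```

Each of these windows would need its own CNN forward pass, which is why region-proposal methods such as the RPN in Mask RCNN are used instead.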

4.2 Region of Interest

The first challenge in detecting parking spaces and cars was to detect the region of interest (ROI). To do so, we placed pink marks at the corners of the images. Pink was chosen because pink cars are rare in the parking lot, so the corners could be detected easily to form the ROI.

4.3 Boundary Detection

To detect the boundary, we had to detect the pink markers placed at the corners, which we did using color detection. Because the images in the dataset differed in size, each image was first resized to a fixed size and then filtered with two different kernels.

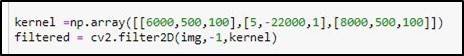

4.3.1 Kernel#1

cv2.filter2D is used to convolve the image with the kernel, which filters out unwanted noise from the original image. The pink marker we wanted to detect is converted to a specific color, while all other colors are converted to black or noise.

4.3.2 Kernel#2

The following kernel enhances the color using the same filtering process. The pink mark we want to detect then becomes smooth and easy to distinguish from other colors.

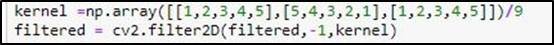

4.3.3 Gaussian Blur

After convolution with both kernels, the filtered image is passed through a Gaussian blur, which slightly blurs it.
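The report does not state the blur parameters, so the sketch below assumes a 5×5 kernel with σ = 1. It builds the normalized Gaussian kernel corresponding to a call such as `cv2.GaussianBlur(img, (5, 5), 1)`; convolving the filtered image with this kernel produces the slight blur described above.

```python
import numpy as np

def gaussian_kernel(size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Return a normalized size x size Gaussian kernel (size should be odd)."""
    ax = np.arange(size) - (size - 1) / 2.0   # e.g. [-2, -1, 0, 1, 2]
    g1d = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g1d, g1d)               # separable: outer product of two 1D Gaussians
    return kernel / kernel.sum()              # weights sum to 1, so brightness is preserved

k = gaussian_kernel(5, 1.0)
print(k.round(3))
```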

4.3.4 Masking Technique

At this point, the original image has been converted into a filtered image. We detect the smooth, distinctive color of the markers using a masking technique: the cv2.inRange function selects the pixels whose values fall within a specified range in the HSV color space, i.e., the specific marker color added in the first step, which lets us detect the boundaries.

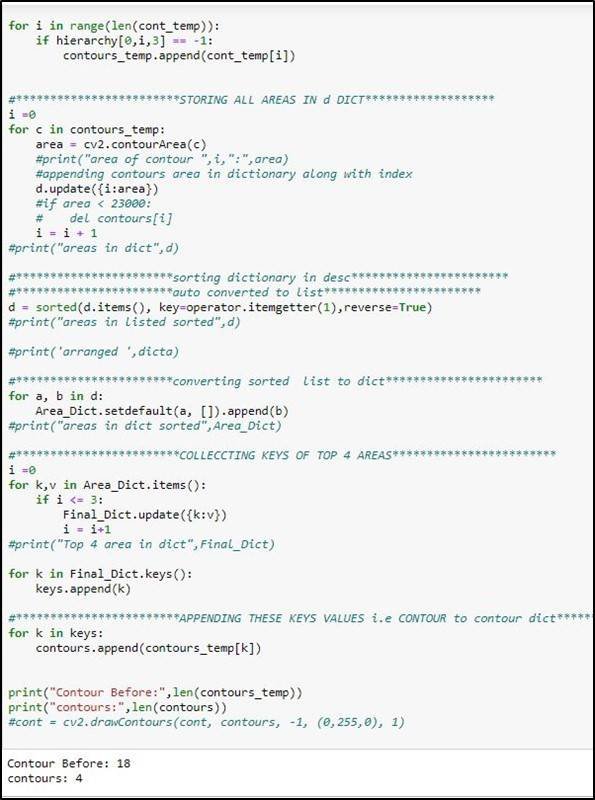
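cv2.inRange(src, lowerb, upperb) outputs 255 for pixels whose every channel lies within the inclusive bounds and 0 otherwise. The NumPy sketch below reproduces that behaviour so the masking step can be seen explicitly; in the real pipeline, the blurred BGR image would first be converted with cv2.cvtColor(..., cv2.COLOR_BGR2HSV). The pink HSV bounds are hypothetical, since the report does not list the range it used.

```python
import numpy as np

# Hypothetical HSV bounds for a pink/magenta marker (OpenCV 8-bit HSV: H 0-179, S and V 0-255).
PINK_LOWER = np.array([140, 80, 80])
PINK_UPPER = np.array([170, 255, 255])

def in_range(hsv: np.ndarray, lower: np.ndarray, upper: np.ndarray) -> np.ndarray:
    """NumPy equivalent of cv2.inRange: 255 where all channels lie within [lower, upper]."""
    inside = np.all((hsv >= lower) & (hsv <= upper), axis=-1)
    return inside.astype(np.uint8) * 255

# Tiny 1x3 "image" already in HSV: a pink pixel, a grey pixel and a green pixel.
hsv = np.array([[[155, 200, 220], [0, 0, 128], [60, 200, 200]]], dtype=np.uint8)
print(in_range(hsv, PINK_LOWER, PINK_UPPER))  # 255 for the pink pixel, 0 for the others
```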

4.3.5 Contours

To find the coordinates of the marks, we have to find contours, which can be defined as curves joining continuous pixels that have the same value, in other words, the boundaries of shapes in the image. When we found and drew the contours in the masked image, we observed many contours, but we only needed those of the pink marks at the corners. So first, we obtained the coordinates of every contour in the image. We then calculated the area of every contour and sorted the contours in descending order of area. After sorting, we kept only the first four contours in the list and discarded the others. In this way, we obtained the coordinates of the marks at the corners.

These coordinates represent our ROI.
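The contour-selection step can be sketched as follows, with each contour represented as a list of (x, y) points (cv2.findContours returns the same points in a NumPy array layout). Area is computed with the shoelace formula, the same quantity cv2.contourArea computes for a closed contour, and each marker's position is approximated by the mean of its vertices. The helper names and example contours are illustrative.

```python
def polygon_area(points):
    """Shoelace formula: area of a closed polygon given as [(x, y), ...]."""
    area = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        area += x1 * y2 - x2 * y1
    return abs(area) / 2.0

def centroid(points):
    """Mean of the contour's vertices, a simple approximation of the marker's position."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))

def corner_markers(contours, keep=4):
    """Sort contours by area (largest first), keep the `keep` largest, return their centres."""
    largest = sorted(contours, key=polygon_area, reverse=True)[:keep]
    return [centroid(c) for c in largest]

def square(x, y, s):
    return [(x, y), (x + s, y), (x + s, y + s), (x, y + s)]

# Four 10x10 corner marks plus two small noise contours.
contours = [square(300, 300, 2), square(0, 0, 10), square(600, 0, 10),
            square(100, 50, 3), square(0, 600, 10), square(600, 600, 10)]
print(corner_markers(contours))
```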

4.4 Changing the perspective

Since the camera is mounted on the rooftop at an angle, the region formed by the corner coordinates is not a square, and a car near the camera appears larger than a car far from it. We therefore change the perspective to transform the region into a square.
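Changing the perspective amounts to estimating a 3×3 homography that maps the four marker coordinates onto the corners of a square top view. In OpenCV this is cv2.getPerspectiveTransform followed by cv2.warpPerspective; the NumPy sketch below solves the same eight linear equations explicitly. The marker coordinates are made-up example values, and the 650×650 target size is taken from Section 4.6. The inverse matrix maps points back, which is what restoring the original view at the end of Section 4.9 requires.

```python
import numpy as np

DST = [(0, 0), (650, 0), (650, 650), (0, 650)]  # top-left, top-right, bottom-right, bottom-left

def order_corners(pts):
    """Order four (x, y) points as top-left, top-right, bottom-right, bottom-left."""
    pts = np.asarray(pts, dtype=float)
    s = pts.sum(axis=1)          # x + y: smallest at top-left, largest at bottom-right
    d = pts[:, 1] - pts[:, 0]    # y - x: smallest at top-right, largest at bottom-left
    return np.array([pts[np.argmin(s)], pts[np.argmin(d)],
                     pts[np.argmax(s)], pts[np.argmax(d)]])

def homography(src, dst):
    """Solve the 3x3 perspective matrix H (with H[2, 2] = 1) mapping 4 src points to 4 dst points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y]); b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y]); b.append(v)
    h = np.linalg.solve(np.array(A, dtype=float), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def apply_h(H, x, y):
    """Map one point through H using homogeneous coordinates."""
    u, v, w = H @ np.array([x, y, 1.0])
    return u / w, v / w

# Hypothetical marker positions: the far edge of the lot looks narrower than the near edge.
markers = [(640, 600), (120, 80), (10, 600), (530, 80)]
H = homography(order_corners(markers), DST)
print(apply_h(H, 120, 80))  # approximately (0.0, 0.0)
```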

4.5 Car Detection

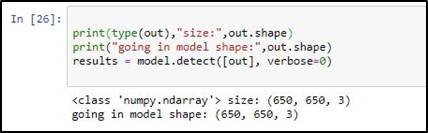

Once the model was trained, we saved its weights in H5 format and used them to detect cars in an image. The image obtained by changing the perspective is fed to the neural network. To load the weights, we need to define the same model architecture used during training, i.e., Mask RCNN.

The model outputs the detected objects, from which we obtained the boundary of every car. When defining the architecture to load the weights, we set the confidence threshold to 0.9, meaning that only objects detected as cars with at least 90% confidence are kept, while objects with lower confidence are discarded.

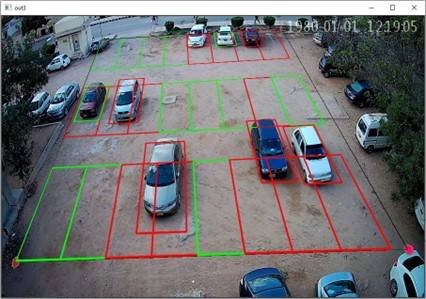

Fig 4.15 Detection of Car
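The confidence rule amounts to a simple filter on the detector's output. The sketch below assumes the detections arrive as an array of boxes and an array of scores, which is how, for example, the Matterport Mask_RCNN implementation returns them (`rois` and `scores` from `model.detect`, with boxes as y1, x1, y2, x2); the report does not name the implementation it used, so treat this as an assumption. In that implementation, the same threshold is set through `DETECTION_MIN_CONFIDENCE` in the model configuration.

```python
import numpy as np

CONFIDENCE_THRESHOLD = 0.9  # objects scored below 90% are discarded

def keep_confident(rois, scores, threshold=CONFIDENCE_THRESHOLD):
    """Keep only boxes whose detection score is at least `threshold`.

    rois: (N, 4) array of boxes; scores: (N,) array of confidences in [0, 1].
    """
    rois = np.asarray(rois)
    scores = np.asarray(scores)
    keep = scores >= threshold
    return rois[keep], scores[keep]

# Hypothetical detections: the second box is not confident enough to count as a car.
rois = [[10, 20, 60, 90], [15, 200, 70, 260], [300, 40, 360, 110]]
scores = [0.98, 0.75, 0.91]
boxes, kept_scores = keep_confident(rois, scores)
print(len(boxes), kept_scores)
```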

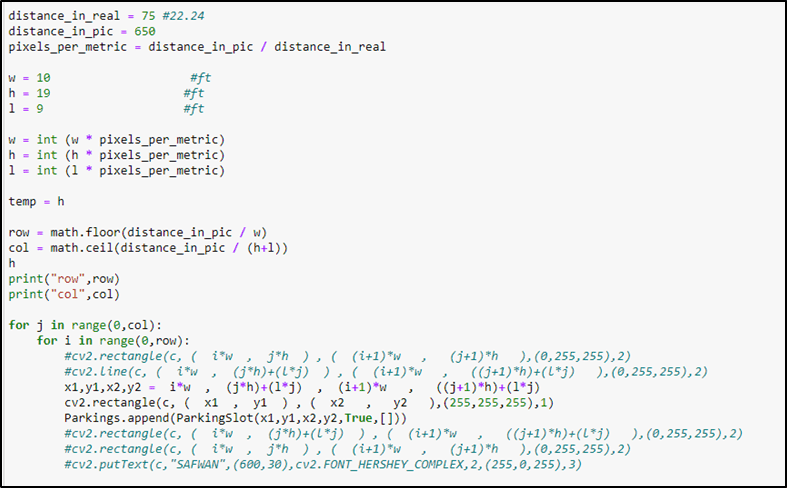

4.6 Drawing Imaginary Parking Lines

After detecting the cars, we must draw the parking lines on the image. For this, we use the standard parking space size, i.e., 19 ft by 10 ft. Since distances in the image are measured in pixels while real distances are in feet, we need to convert feet to pixels. For this conversion, we calculated the pixels-per-metric ratio:

pixels per metric = distance in image (pixels) / distance in reality (feet)

The distance in the image between two corner points is 650 pixels, because we changed the perspective onto a 650×650 image, and the real distance we measured between the same two points was 75 ft. We then used this pixels-per-metric ratio to convert the standard parking space size to pixels.
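With the numbers given above, the conversion works out to 650 / 75 ≈ 8.67 pixels per foot, so a 19 ft × 10 ft space is about 164.7 × 86.7 pixels in the warped image. The sketch below performs this arithmetic and, purely as a hypothetical layout, tiles the square ROI with spaces in a plain grid with no driving aisles; the report's actual line layout appears only in its figures. It also assumes the 75 ft measurement applies to both sides of the square ROI.

```python
PIXELS_IN_IMAGE = 650.0   # side of the warped 650 x 650 image
FEET_IN_REALITY = 75.0    # measured distance between the same two corner points
SLOT_LENGTH_FT, SLOT_WIDTH_FT = 19.0, 10.0

pixels_per_foot = PIXELS_IN_IMAGE / FEET_IN_REALITY  # ~8.67 px per ft

def feet_to_pixels(feet: float) -> float:
    return feet * pixels_per_foot

slot_length_px = feet_to_pixels(SLOT_LENGTH_FT)  # ~164.7 px
slot_width_px = feet_to_pixels(SLOT_WIDTH_FT)    # ~86.7 px

def slot_grid(image_size=PIXELS_IN_IMAGE):
    """Hypothetical layout: full-size slots side by side along x, rows along y, no aisles.

    Returns a list of (x1, y1, x2, y2) pixel rectangles.
    """
    cols = int(image_size // slot_width_px)   # how many 10 ft widths fit across
    rows = int(image_size // slot_length_px)  # how many 19 ft lengths fit down
    return [(c * slot_width_px, r * slot_length_px,
             (c + 1) * slot_width_px, (r + 1) * slot_length_px)
            for r in range(rows) for c in range(cols)]

print(f"{pixels_per_foot:.2f} px/ft, slot = {slot_length_px:.1f} x {slot_width_px:.1f} px")
# 8.67 px/ft, slot = 164.7 x 86.7 px
print(len(slot_grid()), "slots in this hypothetical grid")  # 21
```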

4.7 Finding Car Intersection

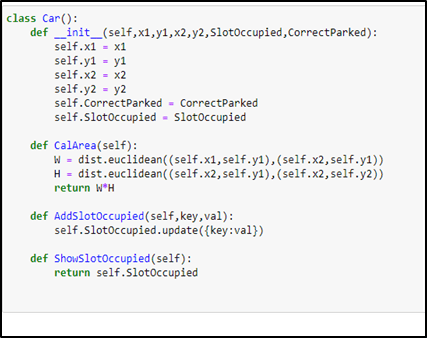

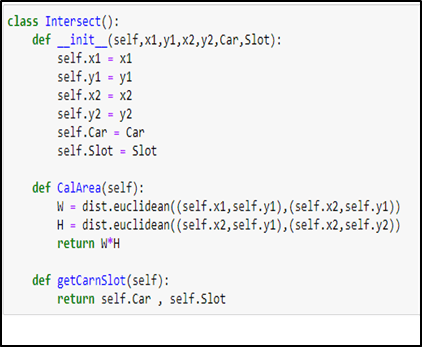

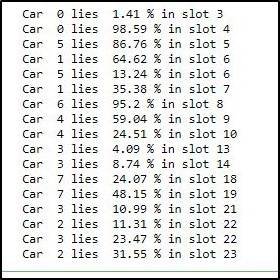

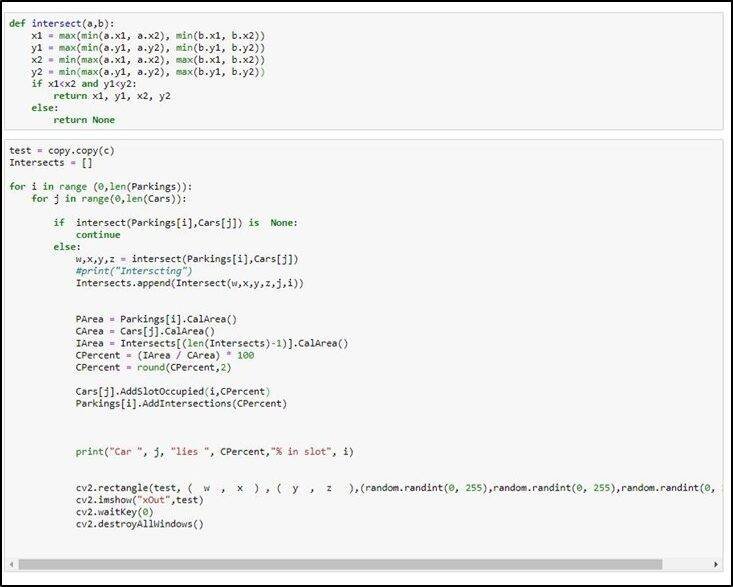

The imaginary parking lines are laid out to maximize the number of cars that can be parked. Since cars are not always parked correctly, we have to calculate the area of each car that lies within each parking slot.

To handle this, we defined three classes: the Car class, the Parking class, and the Intersect class. The Car class contains the coordinates of the car, a Boolean indicating whether the car is correctly parked, and its intersection values with all the slots.

The Parking class contains the coordinates of the parking slot, a Boolean indicating whether the slot is empty, and the percentage intersection of all the cars in that slot. The Intersect class contains the coordinates of the intersection between a car and a parking slot, along with the numbers of the car and the parking slot that intersect.

After calculating the area covered by each car, we computed the intersection of the car with each parking slot using the following formula:

Intersection_Percent = Intersection_area / Car_area * 100
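A minimal sketch of this formula follows, for axis-aligned boxes given as (x1, y1, x2, y2) pixel coordinates in the warped top view. The box representation is an assumption; Mask RCNN also yields pixel masks, which could be intersected instead.

```python
def rect_area(r):
    """Area of an (x1, y1, x2, y2) box; zero if the box is degenerate."""
    x1, y1, x2, y2 = r
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)

def intersection(a, b):
    """Overlapping rectangle of two (x1, y1, x2, y2) boxes, or None if they do not overlap."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    if x2 <= x1 or y2 <= y1:
        return None
    return (x1, y1, x2, y2)

def intersection_percent(car, slot):
    """Intersection_Percent = Intersection_area / Car_area * 100."""
    inter = intersection(car, slot)
    if inter is None:
        return 0.0
    return rect_area(inter) / rect_area(car) * 100.0

slot = (0, 0, 100, 200)
print(intersection_percent((10, 20, 90, 180), slot))   # 100.0: car fully inside the slot
print(intersection_percent((50, 20, 130, 180), slot))  # 62.5: car straddles the slot line
```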

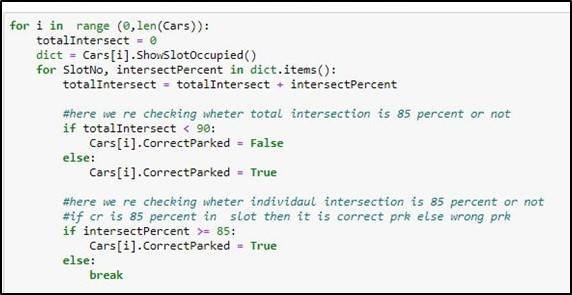

4.8 Car Parked Correctly or Not

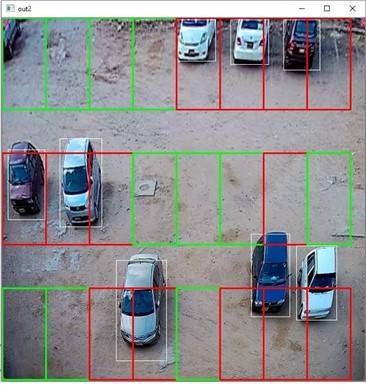

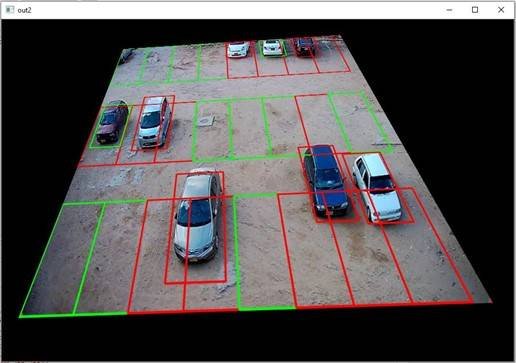

Once we have the intersection percentage of a car with a parking slot, we need a rule to decide whether the car is parked correctly. If the intersection of the car with the parking slot is greater than or equal to eighty-five percent, the car is marked as correctly parked; otherwise, it is marked as wrongly parked. This rule is checked with the following code. A green box on a car indicates that it is correctly parked, whereas a red box indicates that it is wrongly parked.

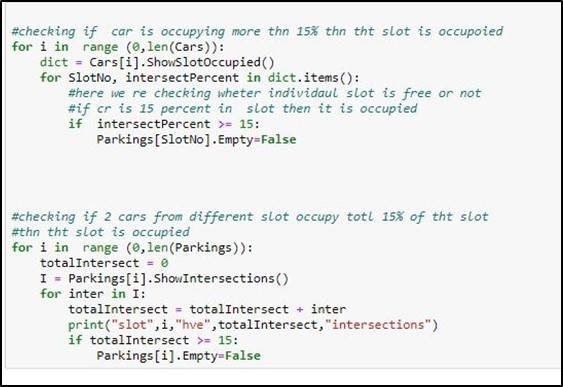
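For readers without the figure, the 85% rule can be sketched as below, using the intersection formula from Section 4.7 on (x1, y1, x2, y2) boxes. This sketch assumes the percentage is measured against a single slot, so a car straddling two slots is marked wrongly parked even if it lies entirely within the lot. The names are illustrative.

```python
CORRECT_PARKING_THRESHOLD = 85.0  # percent of the car's area that must lie in one slot

def overlap_percent(car, slot):
    """Share of the car's (x1, y1, x2, y2) box that lies inside the slot box, in percent."""
    w = min(car[2], slot[2]) - max(car[0], slot[0])
    h = min(car[3], slot[3]) - max(car[1], slot[1])
    if w <= 0 or h <= 0:
        return 0.0
    car_area = (car[2] - car[0]) * (car[3] - car[1])
    return w * h / car_area * 100.0

def is_correctly_parked(car, slots):
    """True (green box) if at least 85% of the car lies inside one slot, else False (red box)."""
    return any(overlap_percent(car, s) >= CORRECT_PARKING_THRESHOLD for s in slots)

slots = [(0, 0, 100, 200), (100, 0, 200, 200)]
print(is_correctly_parked((10, 20, 90, 180), slots))   # True: fully inside the first slot
print(is_correctly_parked((50, 20, 130, 180), slots))  # False: split 62.5% / 37.5%
```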

4.9 Parking Slot Vacant or Occupied

Having determined whether each car is parked correctly, we must next determine which parking slots are utilized and which are still vacant. For this, we defined another rule: if at least fifteen percent of a parking slot is occupied, the slot is marked as occupied; otherwise, it is vacant. Red indicates an occupied slot, while green indicates a vacant one.
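The report does not specify what the fifteen percent is measured against. The sketch below assumes it is the share of the slot's area covered by cars, summed over all cars overlapping that slot, in the spirit of the Parking class storing the intersection of all cars in the slot. Boxes are (x1, y1, x2, y2) tuples, and the names are illustrative.

```python
OCCUPIED_THRESHOLD = 15.0  # percent of the slot's area covered by cars (assumed interpretation)

def overlap_area(a, b):
    """Area shared by two (x1, y1, x2, y2) boxes, or 0 if they do not overlap."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return w * h if w > 0 and h > 0 else 0.0

def slot_status(slot, cars):
    """Return 'occupied' (red) or 'vacant' (green) for one slot."""
    slot_area = (slot[2] - slot[0]) * (slot[3] - slot[1])
    covered = sum(overlap_area(slot, car) for car in cars)  # car boxes assumed not to overlap
    percent = covered / slot_area * 100.0
    return "occupied" if percent >= OCCUPIED_THRESHOLD else "vacant"

slot = (100, 0, 200, 200)
print(slot_status(slot, [(50, 20, 130, 180)]))  # occupied: 24% of the slot is covered
print(slot_status(slot, [(180, 0, 200, 100)]))  # vacant: only 10% is covered
```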

After all the calculations, the image is transformed back to its original perspective so that the user sees the original view.

4.10 Firebase

Firebase is a relatively recent platform used as a backend for many applications. It provides a set of powerful tools that help developers build full-featured, cross-platform applications, with services for configuration, storage, and analytics. Its services include the Realtime Database, Hosting, Cloud Firestore, Cloud Storage, Cloud Messaging, Authentication, ML Kit, and many more, which make complex tasks easier and help developers build high-quality, reliable applications. For example, Firebase Authentication can be integrated into an application, and images, audio, and video can be stored without writing server-side code [4].

4.10.1 Reason to use Firebase

Our project, the vehicle parking management system using image processing, is a real-time project whose purpose is to serve the students, guests, staff, and faculty who visit NED University. After calculating the vacant spaces and the positions of cars, the main hurdle was delivering this information in real time to users who all have different smartphones. Many cloud alternatives could store the details, but the basic requirement of our project was to write data to the database in real time and show it to the user. The main reason for choosing Firebase was its "Realtime Database" feature, which solved this problem and stores the details of the parking system.

4.10.2 Features of Firebase used

- Firebase Authentication

- Firebase Real-time Database

- Firebase Storage

4.10.3 Firebase Authentication

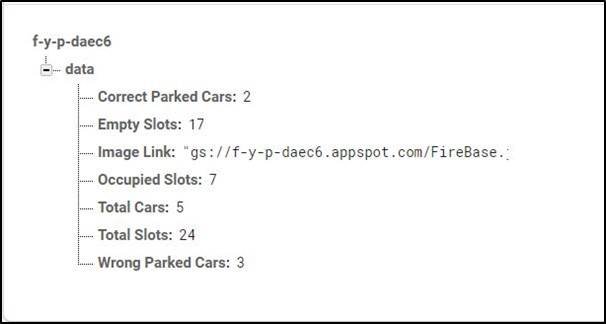

The final output is shown to both the user and the admin; in addition, the admin can see the following details:

- Total Spaces

- Empty Spaces

- Occupied Spaces

- Total Cars

- Correctly Parked Cars

- Wrongly Parked Cars

To access the admin panel, the admin must be authenticated with Firebase. Firebase provides SDKs and ready-made UI libraries for authenticating users across applications with an email address and password, a phone number, etc. We used the email sign-in method, a commonly used method for validation; this Firebase service verifies the user in real time and displays only the details relevant to his/her account.

4.10.4 Firebase Realtime Database

Another key feature of Firebase is the Realtime Database. When applications are built for different platforms with the iOS, Android, or JavaScript SDKs, all clients share the same Realtime Database instance. The basic difference between a conventional database and the Realtime Database is that the latter does not rely on individual HTTP requests to reflect changes or respond to clients; instead, it uses data synchronization, so whenever data changes, every connected client receives the update within milliseconds. The data is stored as JSON, a NoSQL format. Firebase applications remain responsive even when offline, because the SDK persists the data locally and synchronizes any missed changes once connectivity is restored [4].

Moreover, Firebase provides efficient security rules for defining user authentication and privileges; the default rules can be modified, or new ones defined, in the Rules section of the Realtime Database. The Realtime Database can be used for purposes such as [4]:

- Chatting

- Push Notifications

- News feed

Our project uses the Firebase Realtime Database with real-time sync. Our application processes the real-time footage and updates the Firebase database every minute, and these changes are reflected in the user application within a few seconds. The data is stored as a typical JSON tree.

The Realtime Database provides expressive security rules that define how the data is structured and when it can be read or written. When integrating it, the developer can grant different privileges to people with different functions and responsibilities. The Realtime Database API is designed to allow only operations that can be executed quickly, which enables developers to build real-time experiences that serve millions of users without compromising responsiveness [4].

On the paid (Blaze) pricing plan, applications with large-scale data needs can be supported by splitting the data across multiple database instances in the same Firebase project, so the Realtime Database can scale across multiple databases. The Realtime Database is the foremost advantage of Firebase [4].

4.10.5 Database Structure

The data calculated after processing each image is:

- Total Spaces

- Empty Spaces

- Occupied Spaces

- Total Cars

- Correctly Parked Cars

- Wrongly Parked Cars

- Output Image

Fig 4.31 Data Stored in Realtime Database

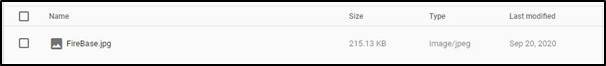
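The sketch below shows one way to assemble these values into a JSON-compatible record and write it with the Firebase Admin SDK for Python (`firebase_admin`, using `credentials.Certificate`, `initialize_app`, and `db.reference(...).set(...)`). The key names, the database node, the service-account path, and the image URL are hypothetical, since the report lists the fields but not its actual schema; the upload function imports the SDK lazily so the record can be built and checked without it.

```python
import json

def build_parking_record(slot_states, car_flags, image_url):
    """Assemble the values stored in the Realtime Database (key names are illustrative).

    slot_states: list of 'occupied' / 'vacant' strings, one per slot
    car_flags:   list of booleans, True if that car is correctly parked
    image_url:   location of the processed output image (hypothetical)
    """
    occupied = sum(1 for s in slot_states if s == "occupied")
    correct = sum(1 for ok in car_flags if ok)
    return {
        "totalSpaces": len(slot_states),
        "emptySpaces": len(slot_states) - occupied,
        "occupiedSpaces": occupied,
        "totalCars": len(car_flags),
        "correctlyParked": correct,
        "wronglyParked": len(car_flags) - correct,
        "outputImage": image_url,
    }

def push_to_firebase(record, key_path, database_url, node="parking"):
    """Write the record with firebase_admin (requires `pip install firebase-admin`)."""
    import firebase_admin
    from firebase_admin import credentials, db
    try:
        firebase_admin.get_app()  # reuse the default app if it is already initialized
    except ValueError:
        cred = credentials.Certificate(key_path)
        firebase_admin.initialize_app(cred, {"databaseURL": database_url})
    db.reference(node).set(record)  # overwrite the node with the latest results

record = build_parking_record(["occupied", "vacant", "occupied"], [True, False], "output.png")
print(json.dumps(record, indent=2))
# push_to_firebase(record, "serviceAccountKey.json", "https://<your-project>.firebaseio.com")
```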

4.10.6 Firebase storage

Cloud Storage is built to store and serve user-generated content, such as photos or videos. Cloud Storage for Firebase is a powerful, simple, and cost-effective object storage service built for Google scale. The Firebase SDKs for Cloud Storage add Google security to file uploads and downloads for Firebase apps, regardless of network quality, and can be used to store images, audio, video, or other user-generated content [5].

The Firebase SDKs for Cloud Storage upload and download files directly from clients. If the network connection is poor, the client can retry the operation right where it left off, saving users time and bandwidth. Cloud Storage stores files in a Google Cloud Storage bucket, making them accessible through both Firebase and Google Cloud. This provides the flexibility to upload and download files from mobile clients via the Firebase SDKs [5].

In the Vehicle Parking Management System using image processing, Firebase Storage is used to store the final output image that is shown to the user.

4.10.7 Configuring Application with Firebase

When you create a Firebase project, you can connect it to your website or application. To connect Firebase to a website, Firebase generates a configuration script that is used to access the Realtime Database, Firestore, etc. These values identify your project rather than acting as a password, so anyone who obtains them can attempt to access your project's database or settings; access must therefore be restricted through Firebase security rules. The script generated by Firebase contains the following information:

- API key (apiKey)

- Auth domain (authDomain)

- Database URL (databaseURL)

- Project ID (projectId)

- Storage bucket (storageBucket)

- Messaging sender ID (messagingSenderId)

- App ID (appId)

CHAPTER 5 – User Interface

The final outcome of our project is a mobile application that provides real-time parking space detection for its users. The application was developed in the Android Studio IDE using Java.

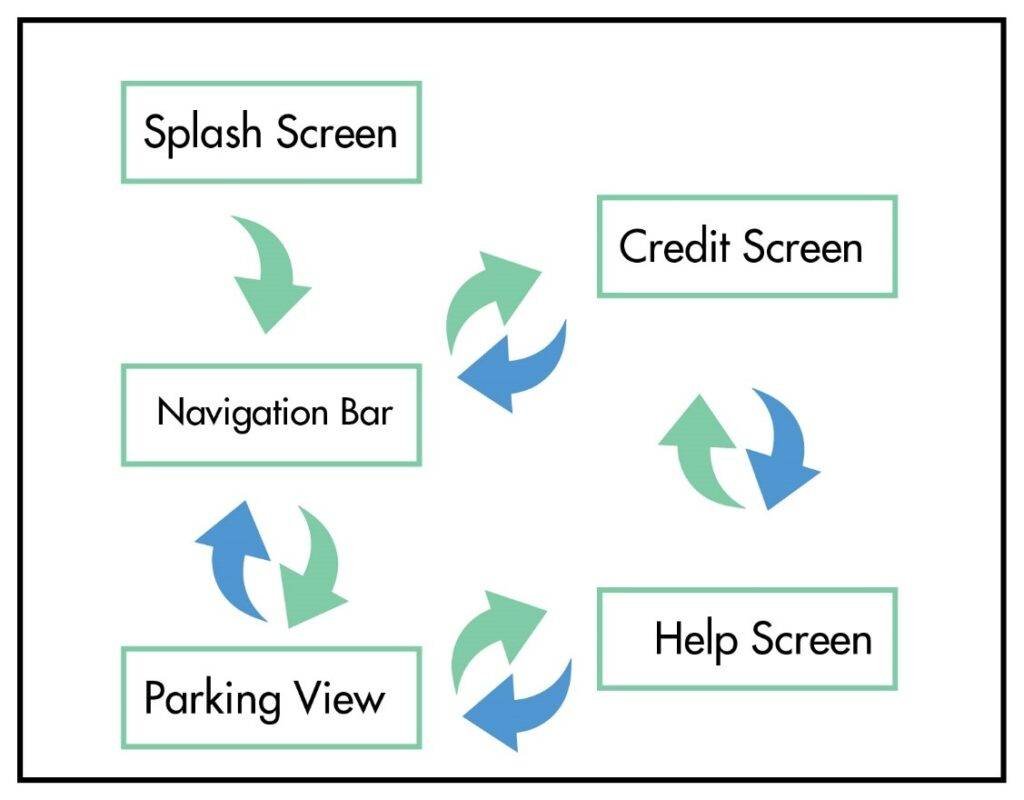

The control flow of the mobile application is depicted as follows:

5.1 User Application

5.1.1 Splash Screen

This is the first screen that appears when the application is opened.

Fig 5.2 Splash Screen

5.1.2 Home Screen

On the Home Screen, the user sees the parking lot overlaid with imaginary lines, through which they can determine whether any slot is vacant: a red box means the parking slot is occupied, and a green box means it is vacant.
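The red/green coloring can be sketched as a simple mapping from slot occupancy to box color. This is a minimal sketch, assuming the image-processing step produces one occupied/vacant flag per slot; the function names and the RGB color tuples are illustrative assumptions, not the application's actual code.

```python
from typing import Dict, List, Tuple

Color = Tuple[int, int, int]

# Assumed box colors as RGB tuples (OpenCV, if used for drawing, expects BGR order).
RED: Color = (255, 0, 0)    # occupied slot
GREEN: Color = (0, 255, 0)  # vacant slot


def slot_colors(occupied: List[bool]) -> Dict[int, Color]:
    """Map each slot index to the color of its box on the Home Screen."""
    return {i: (RED if taken else GREEN) for i, taken in enumerate(occupied)}


def vacant_slots(occupied: List[bool]) -> List[int]:
    """Indices of slots that would be drawn in green."""
    return [i for i, taken in enumerate(occupied) if not taken]
```

For example, `vacant_slots([True, False, False])` returns `[1, 2]`, i.e. the second and third slots would be drawn green.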

5.1.3 Credit Screen

Users can open the Credit Screen from the navigation bar to see the details and contact information of the developers and of the internal and project supervisors.

5.1.4 Help Screen

Users can open the Help Screen from the navigation bar to read basic instructions on how to park the car and how to check whether a parking slot is vacant or occupied.

5.2 Admin Application

The Admin Application has full rights to view the maximum number of vehicles that can be parked, the reserved slots, the vacant slots, and the cars that are wrongly parked.
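The figures shown to the admin can be derived by tallying per-slot statuses. This is a sketch under stated assumptions: each slot is assumed to carry exactly one status label, capacity is taken to be the number of slots, and the labels and the `LotSummary` type are hypothetical, since the report does not specify how slot states are stored.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable

# Hypothetical status labels; the report does not define how slot states are encoded.
VACANT = "vacant"
RESERVED = "reserved"
CORRECT = "correctly_parked"
WRONG = "wrongly_parked"


@dataclass
class LotSummary:
    capacity: int          # maximum number of vehicles (assumed: one per slot)
    vacant: int
    reserved: int
    correctly_parked: int
    wrongly_parked: int


def summarize(statuses: Iterable[str]) -> LotSummary:
    """Tally one status label per slot into the figures shown to the admin."""
    statuses = list(statuses)
    counts = Counter(statuses)  # missing labels count as 0
    return LotSummary(
        capacity=len(statuses),
        vacant=counts[VACANT],
        reserved=counts[RESERVED],
        correctly_parked=counts[CORRECT],
        wrongly_parked=counts[WRONG],
    )
```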

5.2.1 Splash Screen

This is the first screen, and it is identical to the one in the User Application.

5.2.2 Login Page

To authenticate, the admin enters an email and password, after which they can check the status of the parking lot.

5.2.3 Home Screen

After logging in, the admin sees the Home Screen with no information displayed; pressing the Show button displays the picture and the real-time information of the parking lot. To change the password, the admin clicks the Change Password button, enters the old password, and then enters the new password twice to confirm it; pressing Save Changes updates the password.
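The form checks in the change-password flow can be sketched as follows. This is a hypothetical, backend-independent sketch that only validates the three fields; verifying the old password and storing the new one would be handled by the authentication service (with Firebase Authentication on Android, for example, by re-authenticating the user and then calling `FirebaseUser.updatePassword`). The six-character minimum is an assumption mirroring the minimum length Firebase Authentication enforces.

```python
from typing import Optional

MIN_LENGTH = 6  # assumption: mirrors Firebase Authentication's minimum password length


def validate_password_change(old: str, new: str, confirm: str) -> Optional[str]:
    """Return an error message for the Change Password form, or None if it is valid.

    Only the form fields are checked here; whether `old` matches the stored
    credential is left to the authentication backend.
    """
    if not old:
        return "Enter your old password."
    if len(new) < MIN_LENGTH:
        return f"New password must be at least {MIN_LENGTH} characters."
    if new != confirm:
        return "New password and confirmation do not match."
    if new == old:
        return "New password must differ from the old password."
    return None
```

Running these checks before contacting the backend lets the app show a clear message without a network round trip.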

5.3 Updating Data

COVID-19 impacted Pakistan, and the government announced a complete lockdown, so we did not have live access to our camera. To work around this, we used a Tkinter application to upload random images from the dataset we gathered or created; each image is processed, and the processed information is stored in the Firebase Realtime Database along with the image.

When the Start button is pressed, a random image is selected and processed; after a few seconds, another random image is selected and uploaded, and this continues until the Stop button is pressed.

Fig 5.11 Uploading Data using Tkinter

Pressing the Stop button halts the upload.
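The Start/Stop behavior can be sketched with a background thread and a `threading.Event`. This is a minimal sketch, assuming `process` and `upload` are supplied by the rest of the project (they are hypothetical placeholders here) and using a default interval of 5 seconds as an assumed value for "a few seconds".

```python
import random
import threading
from typing import Callable, Optional, Sequence


class RandomImageUploader:
    """Repeatedly pick a random image, process it, and upload the result.

    `process(path)` and `upload(path, result)` are hypothetical callables that
    stand in for the project's image-processing and Firebase upload code.
    """

    def __init__(self, images: Sequence[str], process: Callable, upload: Callable,
                 interval: float = 5.0) -> None:
        self.images = images
        self.process = process
        self.upload = upload
        self.interval = interval
        self._stop = threading.Event()
        self._thread: Optional[threading.Thread] = None

    def _run(self) -> None:
        while not self._stop.is_set():
            path = random.choice(self.images)
            self.upload(path, self.process(path))
            # Wait for the interval, but wake up immediately if Stop is pressed.
            self._stop.wait(self.interval)

    def start(self) -> None:
        """Start the worker thread unless it is already running."""
        if self._thread is None or not self._thread.is_alive():
            self._stop.clear()
            self._thread = threading.Thread(target=self._run, daemon=True)
            self._thread.start()

    def stop(self) -> None:
        """Signal the worker to stop and wait for the current cycle to finish."""
        self._stop.set()
        if self._thread is not None:
            self._thread.join()
```

In a Tkinter window, the buttons could be wired as `tk.Button(root, text="Start", command=uploader.start)` and likewise for Stop. Because `stop()` joins the worker, pressing Stop waits for any upload already in progress to finish.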

Image selection, image processing, and the final upload of the related information are performed using thread-level parallelism to improve speed.
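One way to realize this thread-level parallelism is a three-stage pipeline connected by queues, so that the selection, processing, and uploading of different images overlap. This is a sketch of that technique under assumptions: `run_pipeline` and the stage callables are hypothetical names, not the project's code. In CPython, threads mainly help the network-bound upload stage, since pure-Python processing is serialized by the GIL.

```python
import queue
import random
import threading
from typing import Callable, Sequence

_DONE = object()  # sentinel telling the next stage that no more items follow


def run_pipeline(images: Sequence[str], count: int,
                 process: Callable, upload: Callable) -> None:
    """Select, process, and upload `count` random images using three threads.

    While one image is being processed, the next can already be selected
    and the previous result uploaded.
    """
    selected: queue.Queue = queue.Queue(maxsize=2)
    processed: queue.Queue = queue.Queue(maxsize=2)

    def select_stage() -> None:
        for _ in range(count):
            selected.put(random.choice(images))
        selected.put(_DONE)

    def process_stage() -> None:
        while (path := selected.get()) is not _DONE:
            processed.put((path, process(path)))
        processed.put(_DONE)

    def upload_stage() -> None:
        while (item := processed.get()) is not _DONE:
            upload(*item)

    threads = [threading.Thread(target=stage)
               for stage in (select_stage, process_stage, upload_stage)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
```

The bounded queues (`maxsize=2`) keep a fast stage from running far ahead of a slow one.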

CHAPTER 6 – Conclusion

The final version of our project is a mobile application, offered as a startup product in the management sector, that aims to address the parking difficulty at mega-events where vehicles have to be parked in temporary parking areas with no such arrangements. The vision-based parking management system aims to maximize parking within the region of interest and to give users the best possible service. Users receive real-time parking lot updates to see whether any vacant space is available to park the car. Since many people are in a hurry and park their cars incorrectly, we provide an admin application in which the admin can check the system for wrongly parked cars, the total number of vacant spaces, the number of correctly parked cars, and other details. For example, Pakistan hosts PSL matches, which require a vast parking lot for the audience's vehicles.

The management used to draw lines so that people could park their cars in the slots, and installed CCTV cameras for security; this process took 2 to 3 days, and the parking lines had to be redrawn every day. To facilitate the PSL administration, our mobile application removes the need for physical markings by using imaginary parking lines that users can view through the application.

As a future enhancement, we plan to install an IP camera and a DVR (Digital Video Recorder) to record footage and obtain more efficient results. Because of COVID-19, we did not have live camera access; the application will be linked to a live feed in the future. The following camera limitations need to be taken care of:

- If a pink cart appears in the parking lot, the system may crash or its performance may be disturbed.

- The camera must be mounted at a specific height, i.e., 25 ft above the ground, to cover the maximum area of the parking lot.

- The camera must be deployed in a location where weather conditions cannot affect it.
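The effect of mounting height on coverage can be estimated with a pinhole-camera model. Assuming the camera points straight down with horizontal and vertical fields of view θh and θv, the ground footprint at height h is 2 × h × tan(θh / 2) wide and 2 × h × tan(θv / 2) deep. The field-of-view values in the example are hypothetical, and a tilted camera, as is common in practice, covers a trapezoid rather than a rectangle.

```python
import math
from typing import Tuple


def ground_footprint(height_ft: float, hfov_deg: float, vfov_deg: float) -> Tuple[float, float]:
    """Width and depth (ft) of the ground area seen by a camera pointing straight down.

    Uses the pinhole model: extent = 2 * height * tan(fov / 2).
    """
    width = 2 * height_ft * math.tan(math.radians(hfov_deg) / 2)
    depth = 2 * height_ft * math.tan(math.radians(vfov_deg) / 2)
    return width, depth
```

For example, `ground_footprint(25, 90, 60)` gives a footprint of about 50 ft by 28.9 ft; doubling the height doubles both dimensions, which is why the mounting height determines how much of the lot one camera can cover.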

REFERENCES

[1] Houben, S.; Komar, M.; Hohm, A.; Luke, S. On-vehicle video-based parking lot recognition with fisheye optics. In Proceedings of the International IEEE Conference on Intelligent Transportation Systems, The Hague, The Netherlands, 6–9 October 2013; pp. 7–12.

[2] Hamada, K.; Hu, Z.; Fan, M.; Chen, H. Surround view-based parking lot detection and tracking. In Proceedings of the Intelligent Vehicles Symposium, Seoul, Korea, 28 June–1 July 2015; pp. 1106–1111.

[3] Suhr, J.K.; Jung, H.G. Automatic Parking Space Detection and Tracking for Underground and Indoor Environments. IEEE Trans. Ind. Electron. 2016, 63, 5687–5698.

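[4] Firebase, "Firebase Realtime Database." [Online]. Available: https://firebase.google.com/docs/database. [Accessed: Jul. 12, 2019].

[5] Firebase. [Online]. Available: https://firebase.google.com/

[6] Wikipedia, "Convolutional neural network." [Online]. Available: https://en.wikipedia.org/wiki/Convolutional_neural_network

[7] Towards Data Science. [Online]. Available: https://towardsdatascience.com/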